The situation becomes even worse when you consider the number of sites that link to each other. As news editors around the world search for stories to post, they browse other sites for something interesting to write about. Once they identify something they like, they quickly edit it, throw in some personal observations, and push it out to the world. This creates an effect I like to call the repeated truth. The repeated truth is when something is repeated so many times that it becomes “fact” to many people. I have watched this happen with everything from Windows Vista to Apple patents. If someone hears or reads something often enough, and from “trusted” sources, it becomes a fact for them. This is not an unusual thing to see and happens in the regular press as much as in the technical press. People trust the sites and channels they read or listen to; they expect their favorite authors to do diligent research and cover all of the angles. Sadly, in many cases this does not happen and there is little to no confirmation of data reposted as “news”.

So what are we to make of all of the press about Samsung “cheating” on their benchmarks? Well, there is a little history behind all of the hubbub, and also a bit of sensationalizing that has either been missed or ignored. Here is the timeline as it stands now:

A post was made on Beyond3D’s forum claiming that the new Global Edition of the Samsung Galaxy S4 was found to artificially increase the GPU speed from 480MHz to 533MHz for certain commonly used benchmarks. AnTuTu, Quadrant, and GLBenchmark version 2.5.1 appeared to be able to access the GPU speed of 533MHz while games were limited to 480MHz.
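To put the two clock figures in perspective, here is a quick back-of-the-envelope calculation. It uses only the 480MHz and 533MHz values quoted above; the function name is made up for this illustration, and a higher clock does not necessarily translate one-to-one into a higher benchmark score.

```python
def clock_uplift_percent(base_mhz: float, boosted_mhz: float) -> float:
    """Return the relative clock increase, in percent, of boosted over base."""
    return (boosted_mhz - base_mhz) / base_mhz * 100.0


# 480MHz is the cap reported for games, 533MHz the cap reported for benchmarks.
uplift = clock_uplift_percent(480, 533)
print(f"{uplift:.1f}% higher GPU clock")  # prints "11.0% higher GPU clock"
```

So the gap under discussion is a clock difference of roughly 11 percent.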

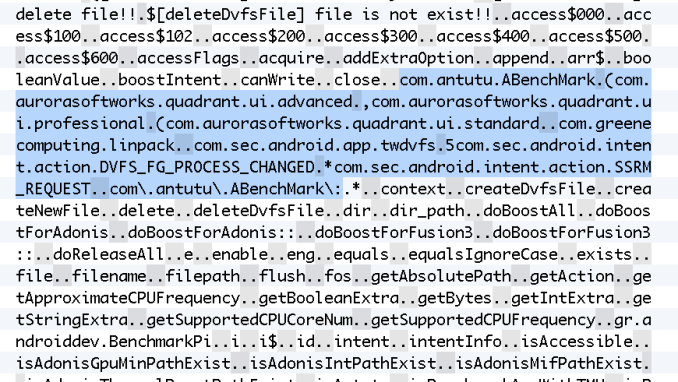

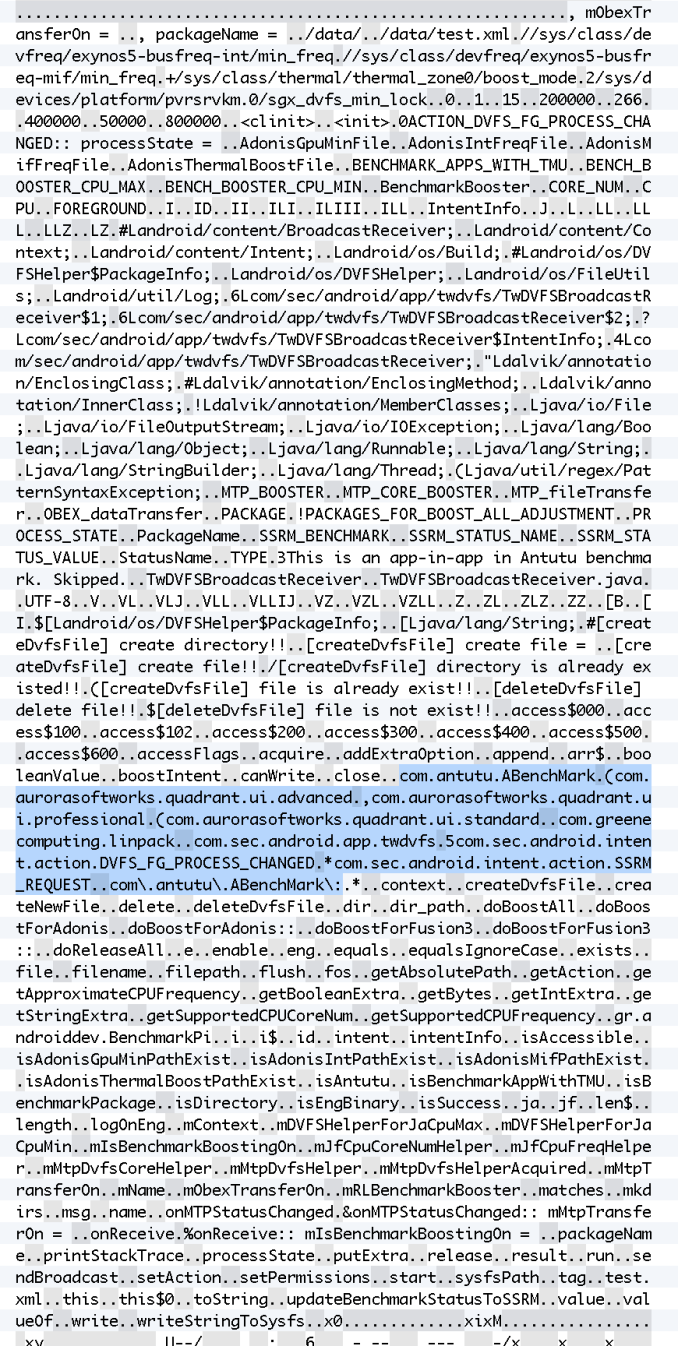

From there Anandtech grabbed the thread and ran with it. In what appeared to be a thorough article with plenty of detail, Anandtech dove deeper into the issue and found what looks like hard-coded optimizations for benchmarking apps, including the ones listed above. One of their biggest pieces of evidence was a section of code that you see below in TwDVFSApp.apk:

Now note that some of the suspected benchmarks are listed right there in a Samsung file. It looks like a version of the old ATi attempt to fix Quake performance by telling the drivers to look for that executable. However, there is a small problem: one of the tests in question, GLBenchmark 2.5.1, is not listed. Not to be deterred, we then hear that this optimization code is a broadcast receiver, which is why GLBenchmark 2.5.1 gets a boost even though it is not listed (but GLBenchmark 2.7.1 does not…).

“What's even more interesting is the fact that it seems as though TwDVFSApp seems to have an architecture for other benchmark applications not specifically in the whitelist to request for BenchmarkBoost mode as an intent, since the application is also a broadcast receiver.

6Lcom/sec/android/app/twdvfs/TwDVFSBroadcastReceiver$1;

6Lcom/sec/android/app/twdvfs/TwDVFSBroadcastReceiver$2;

?Lcom/sec/android/app/twdvfs/TwDVFSBroadcastReceiver$IntentInfo;

4Lcom/sec/android/app/twdvfs/TwDVFSBroadcastReceiver;

boostIntent

5com.sec.android.intent.action.DVFS_FG_PROCESS_CHANGED

*com.sec.android.intent.action.SSRM_REQUEST”

So despite the fact that the code does call out specific items, it also looks like it is listening for other applications. Now correct me if I am wrong, but isn’t that what a performance profile is supposed to do? This code appears to be listening for applications that fit certain profiles and giving them access to higher CPU and GPU clocks as needed. In this initial article there is nothing to qualify the behavior of these applications or to confirm that other applications (stock or installed) have access to or use this performance profile. Instead the focus is on the “key” pieces of code. In any test or investigation you have to rule out the alternatives, or you are guilty of assuming your conclusion. That means you look only for the evidence that makes your assumption correct, which is not very good science: you have prejudged the outcome and are not looking for any contrary evidence.

Samsung Replies with a Denial -

After publication, and after these articles made the rounds, Samsung replied with a denial that they were cheating with these benchmarks or attempting to artificially increase scores in these tests. However, as happens in far too many cases, the denial was seen as confirming the original claims. Many sites used the ambiguous statement “a maximum GPU frequency of 533MHz is applicable for running apps that are usually used in full-screen mode, such as the S Browser, Gallery, Camera, Video Player, and certain benchmarking apps, which also demand substantial performance.” as proof that Samsung was admitting guilt.

Now remember the original problem was that games did not have access to this speed, but that the benchmarks appeared to. Also, at this point no one has checked to see if any other applications can access the 533MHz maximum speed of the GPU. The focus has been on showing that Samsung is cooking the benchmarks. Nothing has been done to qualify the performance window set out by Samsung. The whole statement from Samsung about the issue is below:

“Under ordinary conditions, the GALAXY S4 has been designed to allow a maximum GPU frequency of 533MHz. However, the maximum GPU frequency is lowered to 480MHz for certain gaming apps that may cause an overload, when they are used for a prolonged period of time in full-screen mode. Meanwhile, a maximum GPU frequency of 533MHz is applicable for running apps that are usually used in full-screen mode, such as the S Browser, Gallery, Camera, Video Player, and certain benchmarking apps, which also demand substantial performance.

The maximum GPU frequencies for the GALAXY S4 have been varied to provide optimal user experience for our customers, and were not intended to improve certain benchmark results.

Samsung Electronics remains committed to providing our customers with the best possible user experience.”

Before this statement was released no one went looking for the code that caps the games to 480MHz or to identify any other apps that could hit 533MHz. They only went looking for anything that would “prove” an artificial boost to these benchmarks. There is nothing that proves guilt in this statement and to be honest there is nothing in the TwDVFSApp.apk that shows guilt of intentionally cooking benchmarks on the Galaxy S4 Global Edition (although it does not look good for Samsung).

Still, Anandtech needed to defend their reputation. They went back in another article and attacked the claims by Samsung (even using the comment that the above statement is an admission of guilt). They tested the listed applications and found that the only one able to hit 533MHz was the camera app, and that was only in spikes. As there was no detailed listing of the methodology used to test these apps under this profile, we do not know how these results were obtained. Still, most see this as further proof that this is only for making benchmarks go faster on the Galaxy S4 Global Edition.
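To illustrate the kind of methodology detail that would make such a result checkable, here is a minimal sketch of how a series of sampled GPU clock readings could be summarized per app. This is not Anandtech's method, which was not published; the function name and the sample readings are hypothetical and exist only to show the difference between a sustained 533MHz clock and brief spikes.

```python
def summarize_gpu_samples(samples_mhz, max_mhz=533):
    """Summarize a non-empty list of sampled GPU clock readings (in MHz)."""
    if not samples_mhz:
        raise ValueError("need at least one sample")
    # Count how many samples reached the maximum clock.
    at_max = sum(1 for s in samples_mhz if s >= max_mhz)
    return {
        "peak_mhz": max(samples_mhz),
        "share_at_max": at_max / len(samples_mhz),
    }


# Hypothetical readings, invented purely for illustration (not measured data).
sustained = [533] * 9 + [480]  # an app that holds the top clock
spiky = [480] * 9 + [533]      # an app that only touches it briefly

for label, samples in (("sustained", sustained), ("spiky", spiky)):
    print(label, summarize_gpu_samples(samples))
```

Both made-up traces report the same peak, which is why a peak figure alone says little; the share of samples at the top clock, along with the sampling interval and the workload, is what would need to be published.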

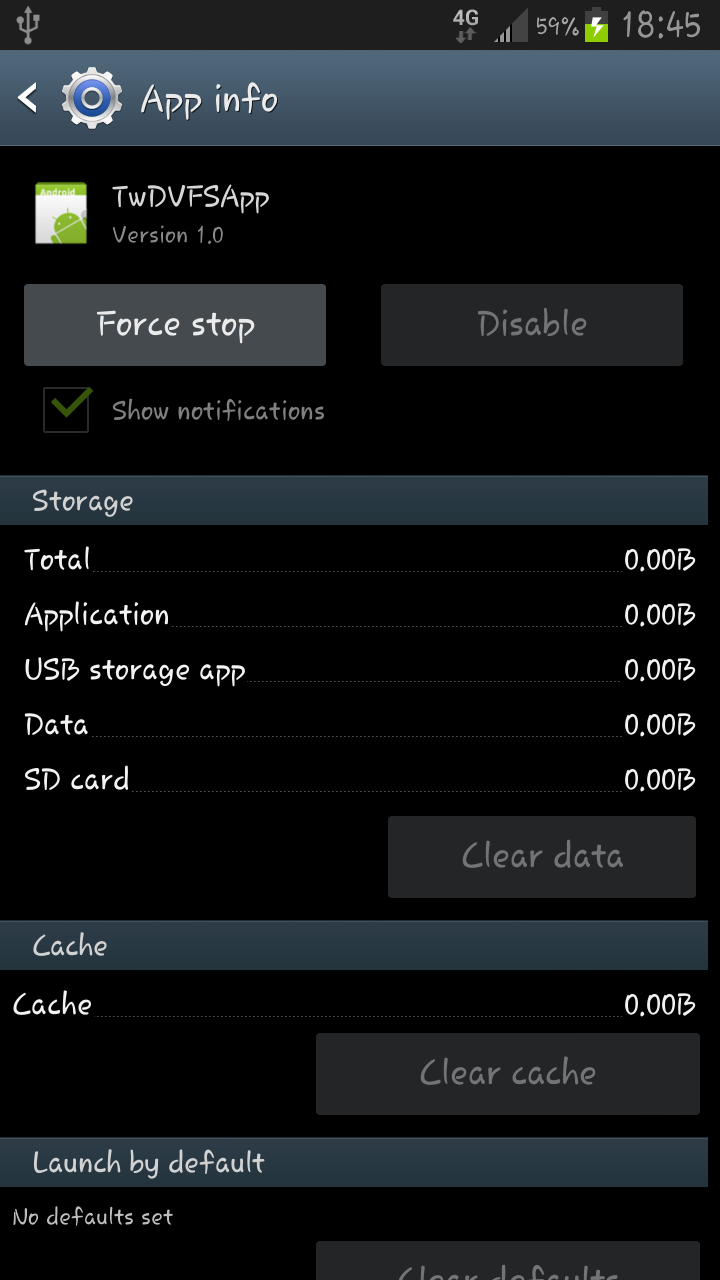

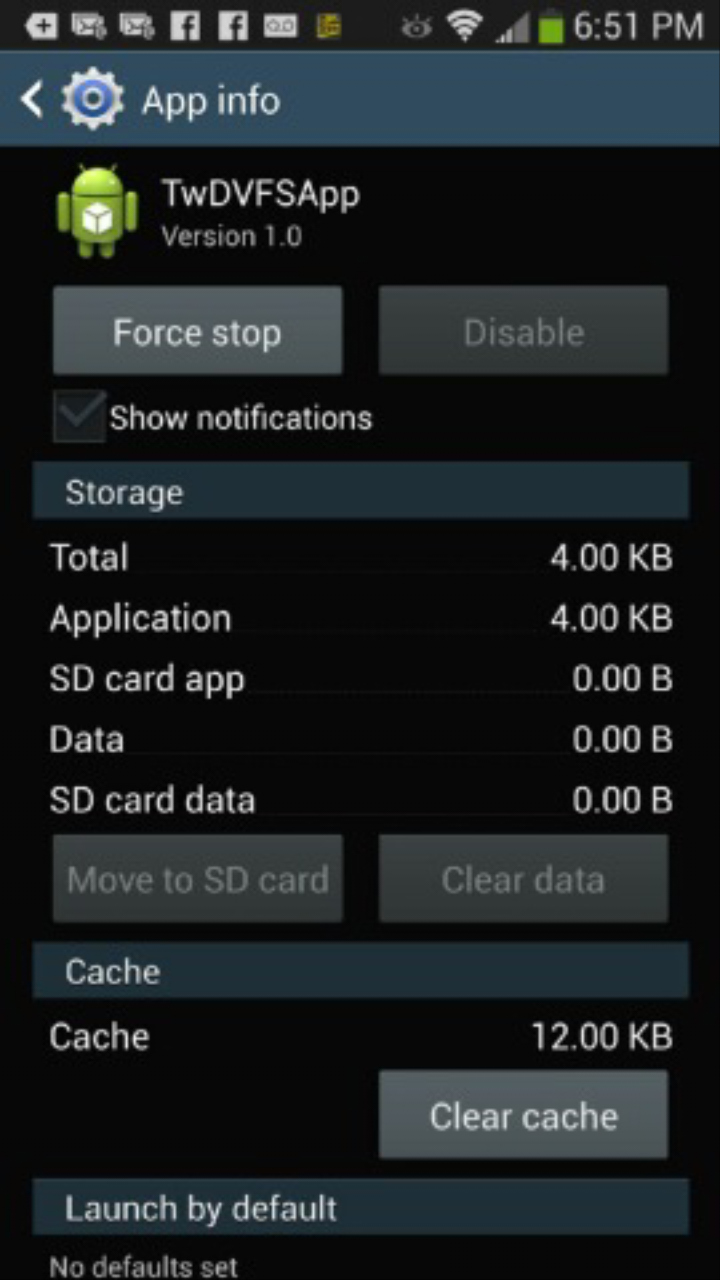

We took a look at our Note II and found TwDVFSApp sitting idle on the device, while our US version of the Galaxy S4 also had this file, but there it was running (see screenshots).


Does this mean that the S4 GE that Anand and others are testing has a different version of this file, or is it simply pushed across the whole family and just used differently due to different hardware specifications? This is a question that so far has not been answered by any of the publications that are calling foul on the S4 GE. However, they do admit that so far only the S4 GE shows this behavior. So it would seem that not enough information was gathered before rendering a verdict on this topic. A good example of this is the fact that TwDVFSApp.apk also pulls from a list called android.os.DVFSHelper.PACKAGES_FOR_BOOST_ALL_ADJUSTMENT that resides in the Android system framework file /system/framework2/framework2.odex. Some of the applications there are:

com.aurorasoftworks.quadrant.ui.standard

com.aurorasoftworks.quadrant.ui.advanced

com.aurorasoftworks.quadrant.ui.professional

com.redlicense.benchmark.sqlite

com.antutu.ABenchMark

com.greenecomputing.linpack

com.glbenchmark.glbenchmark25

com.glbenchmark.glbenchmark21

ca.primatelabs.geekbench2

com.eembc.coremark

com.flexycore.caffeinemark

eu.chainfire.cfbench

gr.androiddev.BenchmarkPi

com.smartbench.twelve

com.passmark.pt_mobile

This file appears to be an Android core file and not a Samsung file. That would indicate that these optimizations are common across the Android platform (after all, why put some of these benchmarks in twice?). It also explains the mystery of why GLBenchmark 2.5.1 gets a boost even though it is not in TwDVFSApp.apk. This little fact was pointed out by a commenter on the first article but missed by the majority of the technical press.
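The effect of a name-based list like this can be sketched in a few lines. This is only an illustration of exact-match lookup, not the actual framework code; the package names are copied from the partial list above, the function name is hypothetical, and the GLBenchmark 2.7 package name used in the check is an assumption.

```python
# Package names copied from the (partial) list quoted above.
PACKAGES_FOR_BOOST = frozenset({
    "com.aurorasoftworks.quadrant.ui.standard",
    "com.aurorasoftworks.quadrant.ui.advanced",
    "com.aurorasoftworks.quadrant.ui.professional",
    "com.redlicense.benchmark.sqlite",
    "com.antutu.ABenchMark",
    "com.greenecomputing.linpack",
    "com.glbenchmark.glbenchmark25",
    "com.glbenchmark.glbenchmark21",
    "ca.primatelabs.geekbench2",
    "com.eembc.coremark",
    "com.flexycore.caffeinemark",
    "eu.chainfire.cfbench",
    "gr.androiddev.BenchmarkPi",
    "com.smartbench.twelve",
    "com.passmark.pt_mobile",
})


def gets_boost(package_name: str) -> bool:
    """Return True if the package name exactly matches an entry in the list."""
    return package_name in PACKAGES_FOR_BOOST


# "com.glbenchmark.glbenchmark27" is an assumed name for GLBenchmark 2.7.
for pkg in ("com.glbenchmark.glbenchmark25", "com.glbenchmark.glbenchmark27"):
    print(pkg, gets_boost(pkg))
```

With exact matching, GLBenchmark 2.5 is covered and a newer release under a different package name is not, which would be consistent with the behavior described above.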

Stacking the deck -

The concept of optimizing systems for specific applications is nothing new. Developers have been doing it for years. I can remember when Lightwave would not run properly on AMD CPUs due to code that looked for an Intel CPU. We have seen games that respond to one GPU differently than another (Borderlands and AA come to mind) along with many other examples. At the hardware level there are optimizations for particular types of code and code blocks. Just about all CPU and GPU makers have microcode that will increase performance for individual cores depending on the thread demand.

None of these improvements are seen as cheating or stacking the deck, even though Intel’s Turbo Boost system gave them an advantage in single-threaded benchmarks. There was no outcry that Intel was cooking benchmarks. Instead the focus was on developers to fix an issue that gave a false impression of Intel’s performance. In fact, when there was an issue with a benchmark (AnTuTu) and Intel CPUs recently, there was not much finger pointing at Intel. Instead the onus primarily fell back on AnTuTu to fix their benchmark so that it was not giving Intel an unfair edge by skipping tests that did not apply to Intel’s x86 SoC. Why was this not more of the focus in this situation? You would think that someone would have taken a look at the actual benchmarks in play here and verified that the optimizations are not a response to tests that do not exercise the hardware properly (like AnTuTu).

Anandtech eventually alludes to the fact that synthetic benchmarks are not the best method for testing and clearly states that they do not even use the tests in question here:

“None of this ultimately impacts us. We don’t use AnTuTu, BenchmarkPi or Quadrant, and moved off of GLBenchmark 2.5.1 as soon as 2.7 was available (we dropped Linpack a while ago). The rest of our suite isn’t impacted by the aggressive CPU governor and GPU frequency optimizations on the Exynos 5 Octa based SGS4s.”

So why the big push to make this an issue? If this does not impact them or their testing, why not spend the extra time to identify what is going on with other phones and other manufacturers, and to better classify what apps can access the performance profile that GLBenchmark and AnTuTu are hitting?

We have an answer for this: it is the desire to get the story out there as fast as possible. When an issue like this pops up on the net, there is a time limit before someone else gets it out there. Anand and Brian Klug were in a hurry to get this out. They did do some homework on the issue and we do respect the lengths they went to, but they did not do all they could to ensure the coverage was fair and impartial. The verbiage was professional and not inflammatory, but the flow of the article was. There is almost no room in their conclusion for any other possibility. They did not come out and say Samsung is cheating on their benchmarks, but they might as well have. They only showed the material that supports that claim and nothing else. In fact, until Samsung posted their statement they did not even begin to look at other apps to see what they might be doing.

This is the state that the majority of the tech press is in: everyone is under pressure to get the story and the results out before the other guys. The longer the delay, the less of an impact your article will have. Everyone wants to write the article that other sites link to, and Anandtech got that with this one. Sadly, they probably would still have gotten it if they had gone the extra step to test other apps and quantify what was going on with GLBenchmark 2.5.1 and AnTuTu.

So who is to Blame?

Samsung screwed up by enabling what looks like an extension for a profile that already existed in Google’s Android. Regardless of the fact that it does allow other apps to perform better, they should not have included benchmarks and tests by name. They should not have done this without some sort of explanation or disclaimer. There are a ton of developer features in Android (and in Samsung’s flavor), so why not point to those when running a benchmark and ask that developers put this information in an FAQ? This would allow someone to see performance in “stock” mode and then in a “benchmark” mode. One gives you the basic performance profile and the other allows the device to push its max limits. It is a simple solution that should have been thought of and allowed for.

Benchmark developers for mobile are also to blame here, as their code is not suited to the typical power and performance profiles on most phones. AnTuTu was already shown to not have properly optimized code for all platforms, so there is a big possibility that other benchmarks are also inefficient in this regard. It is possible that certain optimizations are needed to allow benchmarks to perform properly. It is not an easy task to develop code that works for all hardware builds and Android versions. We know that manufacturers have to spend a considerable amount of time reworking their OS offerings whenever Google pushes out an Android update, so why would we expect an app that is written for stock Google to work the same across all builds?

Last are the industry and the tech press. This group is possibly more to blame than the others, as they have helped to create the environment that allows for this behavior. The push to get content out as quickly as possible means that corners are sometimes cut and not all of the information is fully investigated. Reviews have also become shorter and less detailed. The focus is on synthetic and scripted tests. These can be performed without much user interaction, so the user experience has been removed from the process. There is very little attempt to do real-world testing as reviewers rush products in and out of the lab. This is further fueled by companies that have deadlines for products to be covered and published. These manufacturers and PR firms also know the value of the news cycle and want their product covered NOW for maximum impact. These two groups have been the driving force behind the change in the way the industry and products are covered. Instead of thorough and fair coverage, now it is all about scandal and being the first publication to publish.
