There is a BOM!

A BOM (Bill of Materials) lists every part that goes into building a specific product. If you were building a video card, your BOM would include each component right down to the resistors and the PCB. Normally a BOM is only component specific. It might say "six-layer PCB (green)" or "100 0.1 Ohm resistors", but it would not go so far as to state the Lot number, revision number or anything else. If you look at a typical stick of memory, you might notice that the chips do not always share the same Lot number. The same can be said for almost any component. Companies do not have time to match Lot numbers as they build product; in fact, most do not even try. They take parts from stock as they come in. Oddly enough, it is only by chance that you get parts from the same Lot. It happens because parts from a Lot are manufactured and packaged for shipment around the same time, so they tend to end up at the same places. As Lots usually run into the hundreds of thousands of parts, there is a good chance that a single product will contain parts from the same Lot.
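To make the distinction concrete, here is a minimal Python sketch built on a simplified, hypothetical data model (`BomLine`, `PickedPart` and `satisfies_bom` are made-up names, not any real ERP system's API). A BOM line says which part and how many; only the record of parts actually pulled from stock knows which Lot each one came from, so a build can fully satisfy the BOM while mixing Lots.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BomLine:
    """Hypothetical BOM entry: names WHAT goes into the product, not which batch."""
    part_number: str
    description: str
    quantity: int


@dataclass(frozen=True)
class PickedPart:
    """Hypothetical record of one physical part pulled from stock during a build."""
    part_number: str
    lot: str  # the Lot the tray/reel came from; the BOM never specifies this


def satisfies_bom(bom: list[BomLine], picked: list[PickedPart]) -> bool:
    """True if the picked parts match the BOM by part number and quantity.

    Lot numbers are deliberately ignored, because a standard BOM does not constrain them.
    """
    needed = {line.part_number: line.quantity for line in bom}
    counts: dict[str, int] = {}
    for part in picked:
        counts[part.part_number] = counts.get(part.part_number, 0) + 1
    return counts == needed


# One memory stick needing 8 chips of the same (illustrative) part number.
bom = [BomLine("DRAM-X", "memory chip", 8)]
picked = [PickedPart("DRAM-X", "LOT-A")] * 4 + [PickedPart("DRAM-X", "LOT-B")] * 4

print(satisfies_bom(bom, picked))       # the BOM is met...
print(sorted({p.lot for p in picked}))  # ...even though two Lots ended up on one stick
```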

To a consumer buying a dual- or triple-channel memory kit, this means the chance of getting sticks built with components from different Lots is fairly slim. For the Enterprise customer who buys 16, 32, 48 or more sticks of memory in a single purchase, the likelihood goes up dramatically.
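A rough way to see why quantity matters is a toy probability model. The sketch below assumes, purely for illustration, that each additional stick independently comes from the same Lot as the first one with a fixed probability (0.95 here is a made-up number, not a measured figure). Under that assumption, the chance that an n-stick purchase is all one Lot is p^(n-1), which falls off quickly as n grows.

```python
def chance_all_same_lot(sticks: int, p_match: float) -> float:
    """Probability that every stick shares the first stick's Lot.

    Toy model (an assumption): each additional stick independently matches
    the first stick's Lot with probability p_match.
    """
    if sticks < 1:
        raise ValueError("need at least one stick")
    return p_match ** (sticks - 1)


# Compare consumer-sized kits with Enterprise-sized orders.
for n in (2, 3, 16, 32, 48):
    print(f"{n:>2} sticks: {chance_all_same_lot(n, 0.95):.1%} chance of a single Lot")
```

Real stock picking is not independent (parts shipped together tend to stay together, as noted above), so treat the output as a trend rather than a prediction.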

It’s our Lot in life…

So why are different Lots bad? To put it simply, a Lot is a group of components or products that were all manufactured the same way and at the same time. This is often referred to as a production run. Once that run is complete, there can be small changes to the way the next group of pieces is manufactured. This is normal and happens over the manufacturing life of any part. It is also why you may hear about a recall of a product with serial numbers ending in “XXYYY”: those numbers identify the Lot. As we mentioned, a product shifts gradually over its manufacturing lifecycle. The shift is normally not noticeable to the consumer, because parts from adjacent Lots (Lots made just before or after a specific Lot) are usually so close that there is no difference in performance or stability. Things begin to go south when the Lot numbers are far apart (say, 10 or more). Then things can get a little weird.

When Tolerance attacks

Now that we have briefly (very briefly) told you about BOMs and Lots, let’s talk about why they are important and how they play into what Kingston is doing. Every part has a specification (spec), and that spec has a tolerance the part has to meet or it gets tossed in the bin. A tolerance is expressed as a plus or minus around the design spec. For example, if I design a battery and say it puts out 3.3 Volts, that is my design spec. If, during manufacture and test, we find that some units (depending on the production run) put out 3.35 Volts or 3.26 Volts and still work, the tolerance needs to be at least +/- 0.05 Volts to cover both. Anything in that range is acceptable. Electronic and computer components have the same kind of tolerances.

Within a single Lot, the spread of parts around the spec is the normal manufacturing deviation. Over the product’s lifecycle, that deviation can drift one way or the other. Again, adjacent Lots are usually so close you would never know, but Lots that are far apart may have drifted too far to work together. You end up with parts that are identical in design, but whose actual values sit at such different points in the tolerance range that they just won’t work together. For another example, say a voltage regulator is designed for 3.3V with a tolerance of +/- 0.05V. In Lot A the regulators all measure 3.31V and in Lot B they measure 3.29V; put them together and they are only 0.02V apart, so no harm done. Lot C might measure 3.28V and Lot D 3.27V. If you put regulators from Lot A and Lot D together, you now have a difference of 0.04V, which is pushing the tolerance of these parts when they are put together. Once parts in a grouping are out of tolerance with each other, you have the potential for failure.
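The arithmetic from both examples can be checked with a few lines of Python. The voltages and the +/- 0.05V tolerance come from the text above; the helper names (`required_tolerance`, `in_spec`, `lot_spread`) are illustrative only. Every Lot passes on its own, yet the gap between Lot A and Lot D is twice the gap between Lot A and Lot B.

```python
NOMINAL_V = 3.3     # design spec used in both examples
TOLERANCE_V = 0.05  # +/- tolerance from the regulator example


def required_tolerance(nominal: float, readings: list[float]) -> float:
    """Smallest symmetric +/- tolerance that covers every working reading."""
    return round(max(abs(r - nominal) for r in readings), 3)


# Typical output per Lot, taken from the regulator example.
lot_means = {"A": 3.31, "B": 3.29, "C": 3.28, "D": 3.27}


def in_spec(volts: float) -> bool:
    """True if a reading is within +/- TOLERANCE_V of NOMINAL_V (epsilon absorbs float error)."""
    return abs(volts - NOMINAL_V) <= TOLERANCE_V + 1e-9


def lot_spread(a: str, b: str) -> float:
    """Difference in volts between two Lots, rounded to hide float noise."""
    return round(abs(lot_means[a] - lot_means[b]), 3)


print(required_tolerance(NOMINAL_V, [3.35, 3.26]))  # 0.05 for the battery example
print(all(in_spec(v) for v in lot_means.values()))   # True: each Lot passes alone
print(lot_spread("A", "B"), lot_spread("A", "D"))    # 0.02 0.04
```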

What’s this got to do with Kingston?

I was hoping you would ask that. With this series of memory, Kingston builds RAM kits from a controlled BOM, right down to the SPDs and memory chips. By doing this, they are guaranteeing to their prospective customers that the RAM they get will operate together, all within the same manufacturing tolerances. This is an impressive commitment to the market when you stop and think about it. Kingston recently sent us a perfect example of this type of quality control: 128GB of DDR3 1066 ECC memory (16 8GB sticks) for a project that spanned workstation testing (with Windows 7 Ultimate x64), VMware vSphere and some additional server testing.
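What a controlled BOM buys you can be sketched as a simple kit check. This is a hypothetical illustration, not Kingston’s actual process: the `Module` fields and `kit_is_uniform` are assumed names, and the sketch simplifies things by recording a single DRAM Lot per stick. A kit passes only if every stick shares the same DRAM part, DRAM Lot and SPD revision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    """Hypothetical record for one memory stick in a kit."""
    serial: str
    dram_part: str  # DRAM chip part number
    dram_lot: str   # Lot the DRAM chips came from (simplified: one Lot per stick)
    spd_rev: str    # SPD revision programmed on the stick


def kit_is_uniform(kit: list[Module]) -> bool:
    """True if every stick shares the same DRAM part, DRAM Lot and SPD revision."""
    signatures = {(m.dram_part, m.dram_lot, m.spd_rev) for m in kit}
    return len(signatures) <= 1


# A 16-stick kit built entirely from one Lot passes...
kit = [Module(f"SN{i:02}", "DRAM-X", "LOT-A", "1.0") for i in range(16)]
print(kit_is_uniform(kit))

# ...but swapping in a single stick from a distant Lot fails the check.
kit[15] = Module("SN15", "DRAM-X", "LOT-K", "1.0")
print(kit_is_uniform(kit))
```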

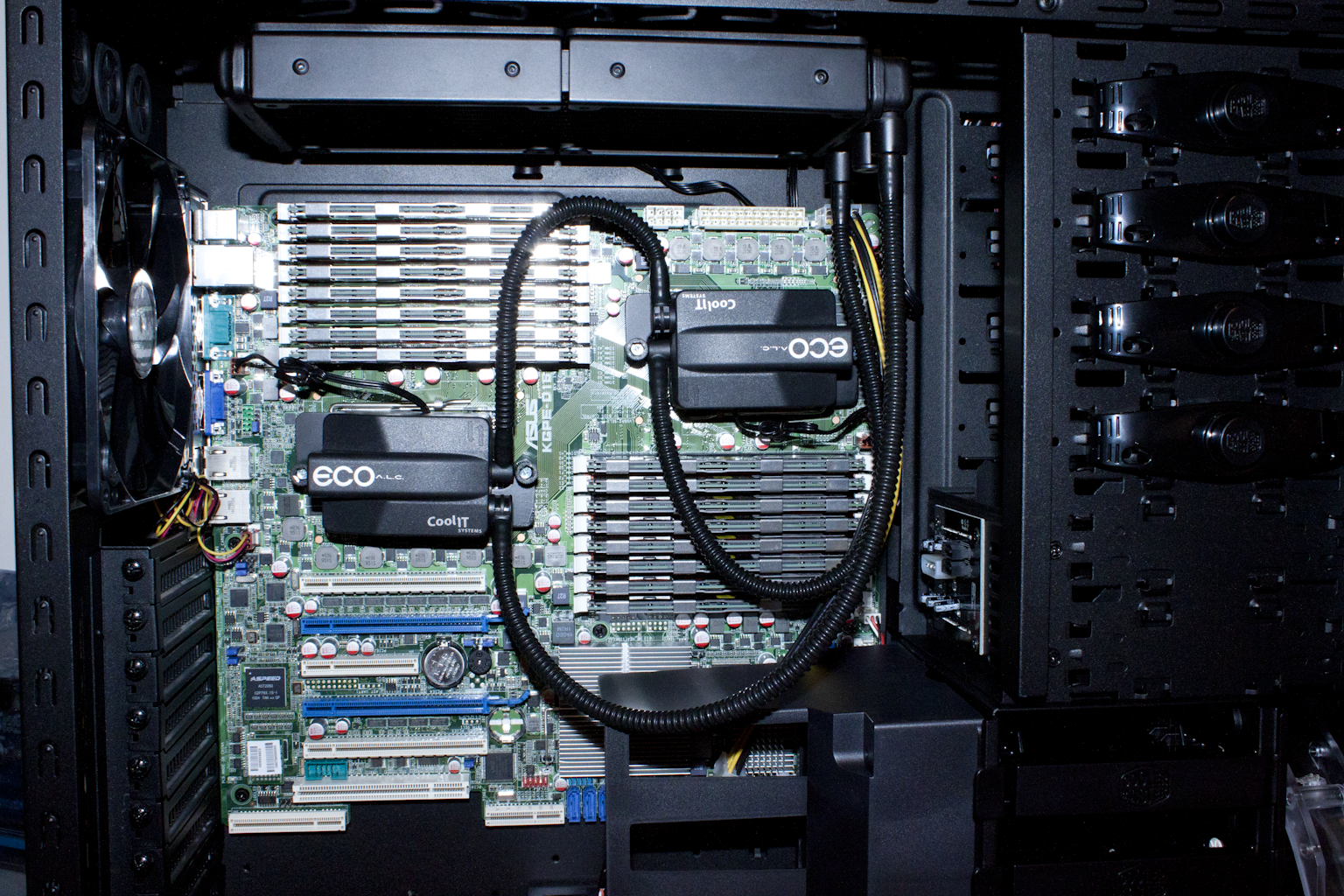

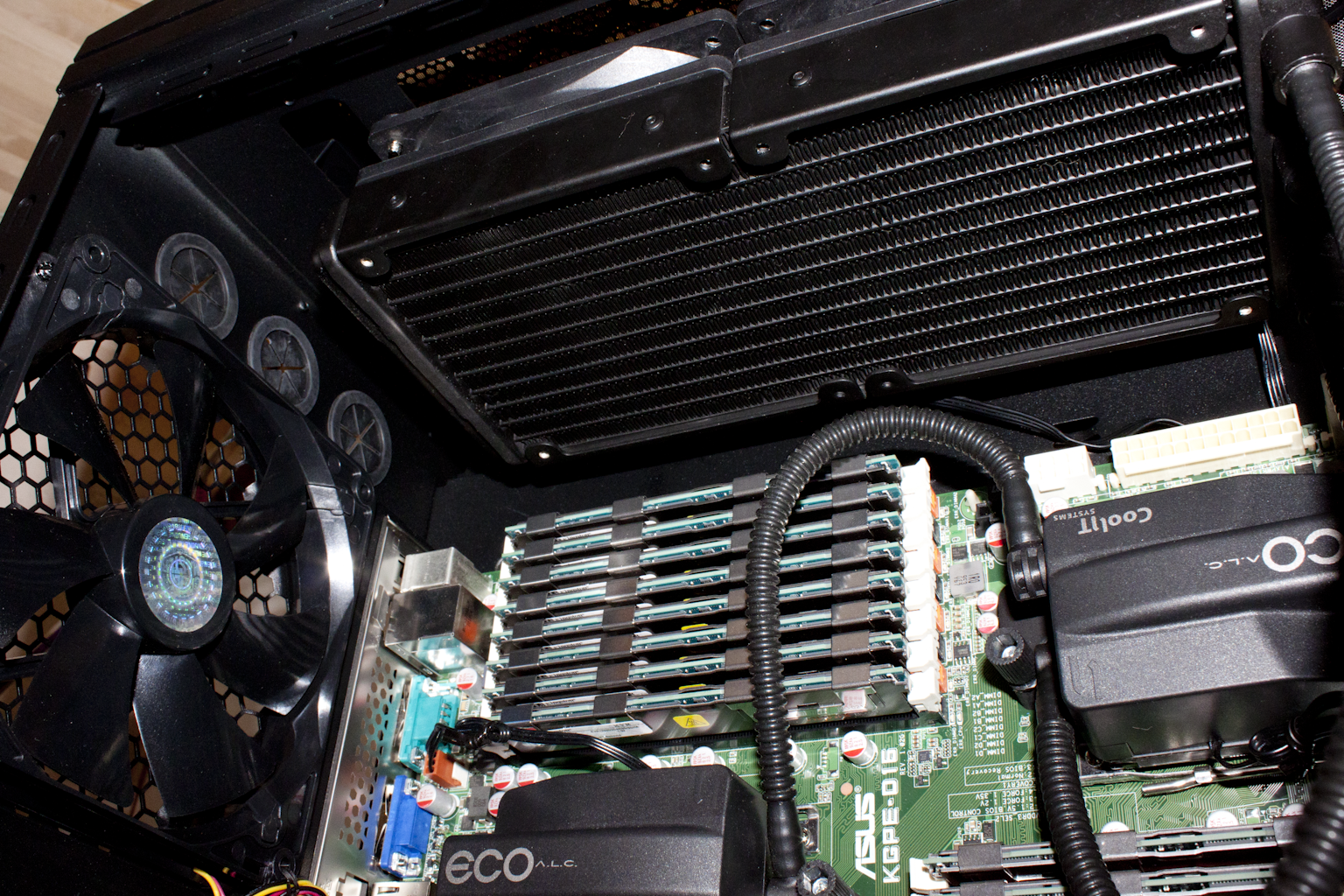

Our tests started off with some heavy workstation lifting on a dual Magny-Cours setup with all 128GB of RAM in the same system. Some people have claimed that Windows 7 x64 has trouble when you push it toward that much memory. We suspected the problem was more a matter of handling instructions across all of that memory than the actual amount of installed memory, and it turns out we were correct. The 128GB of Kingston ValueRAM Server Premier handled our full LightWave battery, including a 110-hour render of a 4K sample (our favorite Pinball scene). This was impressive in more ways than one: we had 128GB of memory supplying 24 physical CPU cores (roughly 5.3GB per core). Because we used perspective cameras, there was heavy ray tracing, while the 2x anti-aliasing forced even more data across the CPU-to-memory bridge.

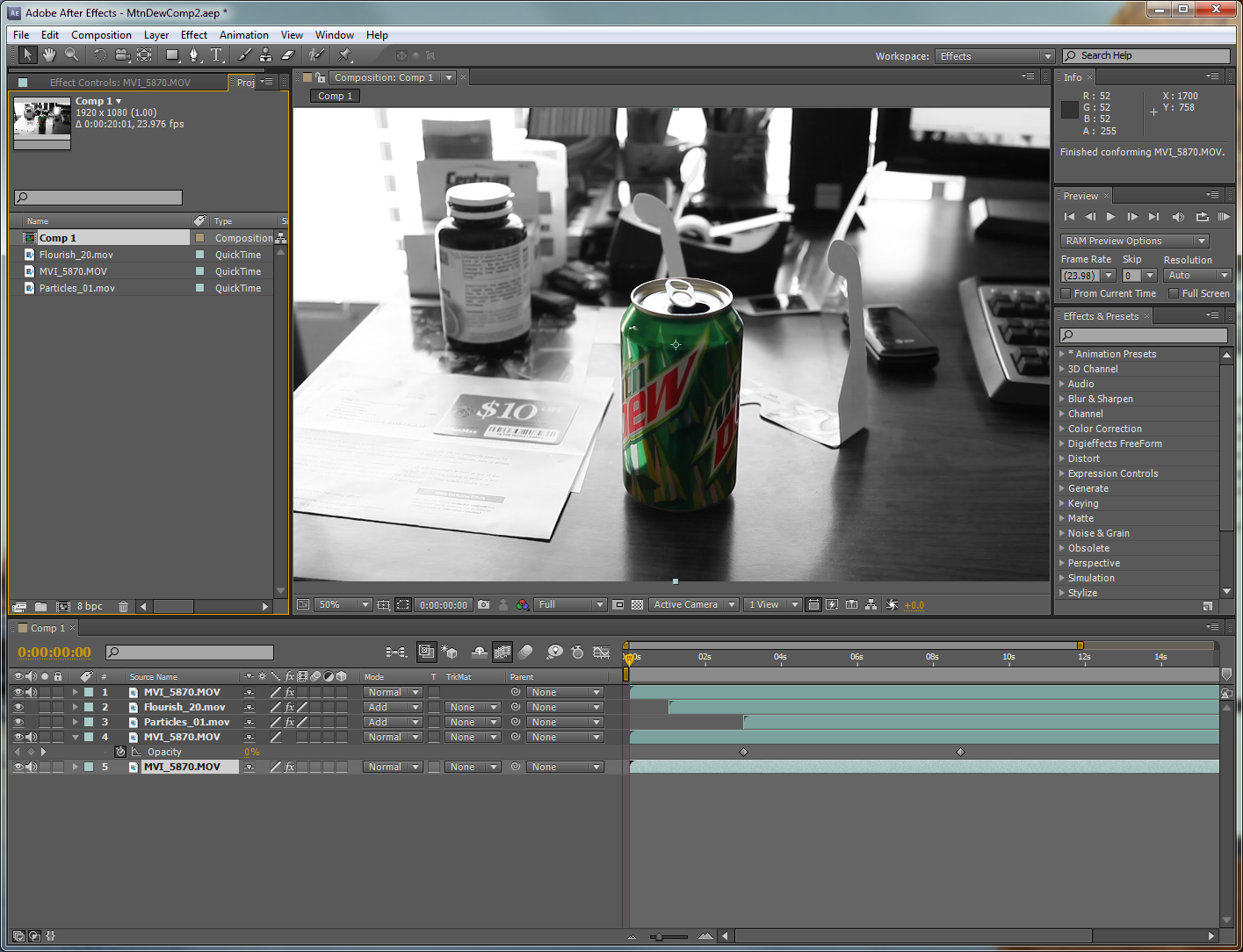

Next up was converting 800 RAW images ranging from 15 to 20MB each (15.1 megapixels). These were converted at 4752x3168 and written to a different drive array. This exciting test was followed by a few hours of running Adobe After Effects with a project that Peter Kapas donated just for this review. We were able to assign just over 2GB of RAM per core for that test, and again the Kingston ValueRAM Server Premier handled it without a glitch.

After that we loaded up a fresh installation of Windows Server 2008 R2 Datacenter running Hyper-V. Again, the Kingston RAM handled the load of roughly 14 virtual servers (including Exchange 2010, two SQL Server 2008 R2 instances, SharePoint 2010, XenDesktop HDX and more). We have tried setups like this before with other vendors’ memory (in my work environment) and have witnessed multiple memory-related errors or high ECC correction counts (which have an effect on performance). After our experience with Microsoft’s products, we pulled out an evaluation license of VMware vSphere 4.1 and went to town. Again, we ran 14 virtual servers without any issues at all for the length of the 30-day evaluation.

Of course, something like this comes at a cost. Our little test kit would have set us back around $9,000 if it had not been a loaner from Kingston (prices were much higher at the time we received it). But once again, if you are in the Enterprise or professional market, this kind of stability (which equals performance) is incredibly valuable. It is also nice to know that Kingston is not charging a premium for this; if you need the extra control and stability, all you have to do is ask for it. I could not imagine having to explain to a client that their SQL database is down because of mismatched or poorly chosen memory. I would also gladly spend the extra money to make sure my photographs and video projects have no issues while I am working on them. There is nothing like having a system lock up while you are working on a 20MB+ RAW file because of memory addressing errors, or having a render crap out on you for the same reason. I have been through both of these before and can tell you they are not fun at all.

Which brings me to my conclusion: if you need stability and performance across large amounts of memory (16GB and up), you will want to seriously consider giving Kingston a call and looking into their ValueRAM Server Premier line. You won’t be sorry you did.